Will Supercapacitors Come to AI's Rescue?

MAY 6, 2025

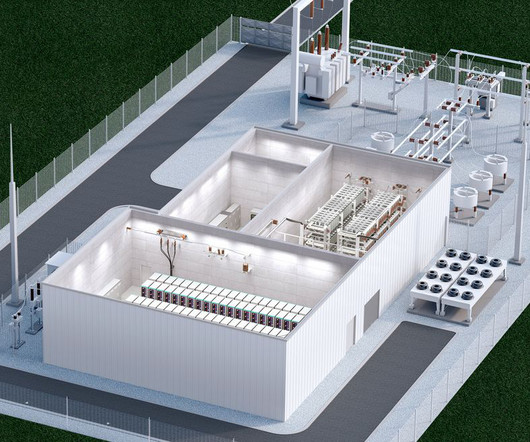

Because training is orchestrated simultaneously across many thousands of GPUs in massive data centers, and each new generation of GPU consumes more power than the last, each step of the computation corresponds to a massive spike in power draw. Keeping the GPUs busy between steps makes the grid see a consistent load, but it also wastes energy on unnecessary work.