The next great chatbot will run at lightning speed on your laptop PC, with no Internet connection required.

That, at least, was the vision recently laid out by Intel’s CEO, Pat Gelsinger, at the company’s 2023 Intel Innovation summit. Flanked by on-stage demos, Gelsinger announced the coming of “AI PCs” built to accelerate an increasing range of AI tasks using only the hardware beneath the user’s fingertips.

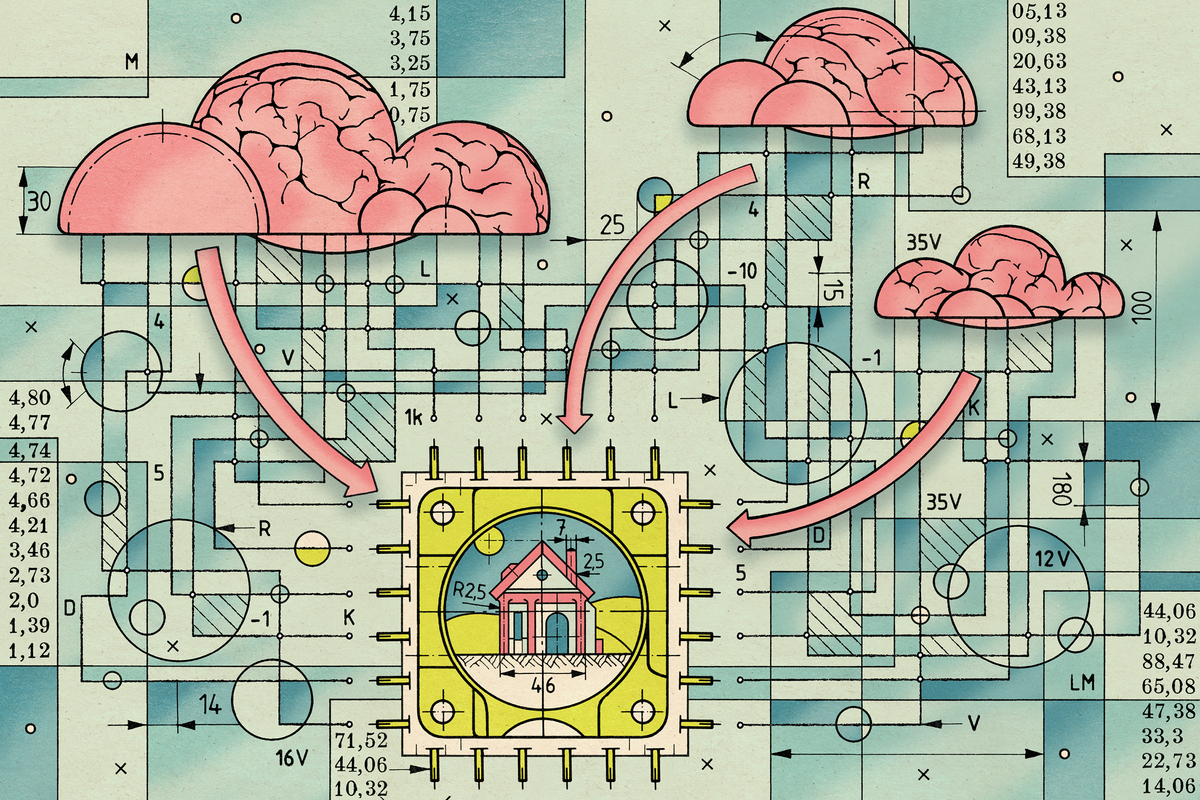

Intel’s not alone. Every big name in consumer tech, from Apple to Qualcomm, is racing to optimize its hardware and software to run artificial intelligence at the “edge”—meaning on local hardware, not remote cloud servers. The goal? Personalized, private AI so seamless you might forget it’s “AI” at all.

“Fifty percent of edge is now seeing AI as a workload,” says Pallavi Mahajan, corporate vice president of Intel’s Network and Edge Group. “Today, most of it is driven by natural language processing and computer vision. But with large language models (LLMs) and generative AI, we’ve just seen the tip of the iceberg.”

With AI, cloud is king—but for how long?

2023 was a banner year for AI in the cloud. Microsoft CEO Satya Nadella raised a pinky to his lips and set the pace with a US $10 billion investment into OpenAI, creator of ChatGPT and DALL-E. Meanwhile, Google has scrambled to deliver its own chatbot, Bard, which launched in March; Amazon announced a $4 billion investment in Anthropic, creator of ChatGPT competitor Claude, in September.

These moves promised AI would soon revolutionize every aspect of our lives, but that dream has frayed at the edges. The most capable AI models today lean heavily on data centers packed with expensive AI hardware that users must access over a reliable Internet connection. Even with a good connection, remotely accessed AI models can be slow to respond. AI-generated content, such as a ChatGPT conversation or a DALL-E 2–generated image, can stall out from time to time as overburdened servers struggle to keep up.

Oliver Lemon, professor of computer science at Heriot-Watt University, in Edinburgh, and colead of the National Robotarium, also in Edinburgh, has dealt with the problem firsthand. A 25-year veteran in the field of conversational AI and robotics, Lemon was eager to use the largest language models for robots like Spring, a humanoid assistant designed to guide hospital visitors and patients. Spring seemed likely to benefit from the creative, humanlike conversational abilities of modern LLMs. Instead, it found the limits of the cloud’s reach.

“[ChatGPT-3.5] was too slow to be deployed in a real-world situation. A local, smaller LLM was much better. My impression is that the very large LLMs are too slow to use for speech-based interaction,” says Lemon. He’s optimistic that OpenAI could find a way around this but thinks it would require a smaller, nimbler model than the all-encompassing GPT.

Spring instead went with Vicuna-13B, a version of Meta’s Llama LLM fine-tuned by researchers at the Large Model Systems Organization. “13B” refers to the model’s 13 billion parameters, which, in the world of LLMs, is small. The largest Llama models encompass 70 billion parameters, and OpenAI’s GPT-3.5 contains 175 billion parameters.
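To see why 13 billion parameters counts as “small,” it helps to estimate how much memory a model’s weights occupy. The sketch below is a back-of-the-envelope calculation using the parameter counts above, assuming each parameter is stored either as a 16-bit (2-byte) value or, after 4-bit quantization, as half a byte. It counts weights only and ignores activations, caches, and runtime overhead, so real footprints are larger.

```python
# Back-of-the-envelope weight-memory estimate.
# Assumption: weights only (no activations, KV cache, or runtime overhead).
BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int4": 0.5,  # 4-bit quantized weights (an assumed deployment choice)
}

# Parameter counts as given in the article.
MODELS = {
    "Vicuna-13B": 13e9,
    "Llama (largest, 70B)": 70e9,
    "GPT-3.5 (175B)": 175e9,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, params in MODELS.items():
        fp16 = weight_memory_gb(params, "fp16")
        int4 = weight_memory_gb(params, "int4")
        print(f"{name}: ~{fp16:.0f} GB at fp16, ~{int4:.1f} GB at 4-bit")
```

By this rough measure, a 4-bit Vicuna-13B needs about 6.5 GB for its weights, within reach of a well-equipped laptop, while a 175-billion-parameter model needs roughly 87.5 GB even at 4-bit precision.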

Reducing the number of parameters in a model makes it less expensive to train, which is no small advantage for researchers like Lemon. But there’s a second, equally important benefit: quicker “inference,” the process of applying a trained AI model to new data, like a text prompt or photograph. Fast inference is a must-have for any AI assistant, robotic or otherwise, meant to help people in real time.
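A common rule of thumb links parameter count to inference speed. When a dense model generates text one token at a time for a single user, it typically has to read all of its weights from memory for every token, so memory bandwidth caps the tokens produced per second. The sketch below applies that rule, assuming 4-bit weights and a hypothetical 100 GB/s of memory bandwidth; actual systems fall below this ceiling.

```python
# Upper bound on single-stream decoding speed for a memory-bandwidth-bound model.
# Assumptions: dense model, batch size 1, every weight read once per token,
# and a hypothetical memory bandwidth figure (not a measured device spec).


def max_tokens_per_second(num_params: float, bytes_per_param: float,
                          bandwidth_gb_s: float) -> float:
    """Return the bandwidth-limited ceiling on generated tokens per second."""
    bytes_per_token = num_params * bytes_per_param  # weights streamed per token
    return bandwidth_gb_s * 1e9 / bytes_per_token


HYPOTHETICAL_BANDWIDTH_GB_S = 100.0  # illustrative laptop-class figure

if __name__ == "__main__":
    for name, params in [("13B", 13e9), ("175B", 175e9)]:
        tps = max_tokens_per_second(params, 0.5, HYPOTHETICAL_BANDWIDTH_GB_S)
        print(f"{name} at 4-bit: at most ~{tps:.1f} tokens/s")
```

Under these assumptions, the 13B model’s ceiling is roughly 15 tokens per second versus about 1 for a 175B model on the same hardware: cutting parameters by about 13x raises the speed ceiling by the same factor.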

“If you look into it, the inferencing market is actually much bigger than the training market. And an ideal location for inferencing to happen is where the data is,” says Intel’s Mahajan. “Because when you look at it, what is driving AI? AI is being driven by all the apps that we have on our laptops or on our phones.”

Edge performance means privacy

One such app is Rewind, a personalized AI assistant that helps users recall anything they’ve accomplished on their Mac or PC. Deleted emails, hidden files, and old social media posts can be found through text-based search. And that data, once recovered, can be used in a variety of ways. Rewind can transcribe a video, recover information from a crashed browser tab, or create summaries of emails and presentations.

Mahajan says Rewind’s arrival on Windows is an example of Intel’s open AI development ecosystem, OpenVINO, in action. OpenVINO lets developers call on locally available CPUs, GPUs, and neural processing units (NPUs) without writing code specific to each, optimizing inference performance for a wide range of hardware. Apple’s Core ML provides developers a similar toolset for iPhones, iPads, and Macs.
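The core idea behind such toolkits is that an application describes its inference once and lets a runtime choose whichever processor is available. OpenVINO exposes this through an “AUTO” device option, but the sketch below does not use OpenVINO at all. It is a hypothetical, stripped-down illustration of priority-based device selection; the `Runtime` class, the toy model, and the NPU-then-GPU-then-CPU preference order are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical backends: each maps a device name to a function that runs
# "inference" on a list of numbers. In a real toolkit these would be
# compiled kernels for the CPU, GPU, or NPU.
Backend = Callable[[List[float]], List[float]]


@dataclass
class Runtime:
    backends: Dict[str, Backend]
    # Assumed preference order: try the dedicated accelerator first.
    priority: tuple = ("NPU", "GPU", "CPU")

    def select_device(self) -> str:
        """Return the highest-priority device that has a registered backend."""
        for device in self.priority:
            if device in self.backends:
                return device
        raise RuntimeError("no supported device available")

    def infer(self, inputs: List[float]) -> List[float]:
        """Run the same request on whichever device was selected."""
        return self.backends[self.select_device()](inputs)


def toy_model(xs: List[float]) -> List[float]:
    # Stand-in "model": doubles each input.
    return [2 * x for x in xs]


if __name__ == "__main__":
    laptop = Runtime(backends={"CPU": toy_model, "GPU": toy_model})
    print(laptop.select_device())  # GPU (no NPU registered)
    print(laptop.infer([1.0, 2.0]))  # [2.0, 4.0]
```

The application code calls `infer` the same way on every machine; only the registered backends differ, which is the property that spares developers from writing per-processor code.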

And quick local inference acts as a gatekeeper for a second goal that’s likely to become key for all personalized AI assistants: privacy.

Rewind offers a huge range of capabilities. But, to do so, it requires access to nearly everything that occurs on your computer. This isn’t unique to Rewind. All personalized AI assistants demand broad access to your life, including information many consider sensitive (like passwords, voice and video recordings, and emails).

Rewind combats security concerns by handling both training and inference on your laptop, an approach other privacy-minded AI assistants are likely to emulate. And by doing so, it demonstrates how better performance at the edge directly improves both personalization and privacy. Developers can begin to provide features once possible only with the power of a data center at their back and, in turn, offer an olive branch to those concerned about where their data goes.

Phil Solis, research director at IDC, thinks this is a key opportunity for on-device AI to ripple across consumer devices in 2024. “Support for AI and generative AI on the device is something that’s a big deal for smartphones and for PCs,” says Solis. “With Web-based tools, people were throwing information in there…. It’s just sucking everything in and spitting it out to other people. Privacy and security are important reasons to do on-device AI.”

Unexpected intelligence on a shoestring budget

Large language models make for superb assistants, and their capabilities can reach into the more nebulous realm of causal reasoning. AI models can form conclusions based on information provided and, if asked, explain their thoughts step-by-step. How well AI actually understands its conclusions is up for debate, but the results are already being put into practice.

The startup Artly uses AI in its barista bots, Jarvis and Amanda, which serve coffee at several locations across North America (it makes a solid cappuccino—even by the scrupulous standards of Portland, Oregon’s coffee culture). The company’s cofounder and CEO, Meng Wang, wants to use LLMs to make its fleet of baristas smarter and more personable.

“If the robot picked up a cup and tilted it, we would have to tell it what the result would be,” says Wang. But an LLM can be trained to infer that conclusion and apply it in a variety of scenarios. Wang says the robot doesn’t run all inference on the edge—the barista requires an online connection to verify payments, anyway—but it hides an Nvidia GPU that handles computer-vision tasks.

This hybrid approach shouldn’t be ignored: in fact, the Rewind app does something conceptually similar. Though it trains and runs inference on a user’s personal data locally, it provides the option to use ChatGPT for specific tasks that benefit from high-quality output, such as writing an email.
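A hybrid design like this comes down to a routing decision made per request. The sketch below is hypothetical: the task names, the opt-in set, and the two handler functions are invented for illustration and do not reflect how Rewind is actually built. It keeps every request on-device by default and sends one to a cloud model only when the user has explicitly opted in for that task.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class Request:
    task: str  # e.g. "search" or "draft_email" (hypothetical task names)
    text: str


@dataclass
class HybridRouter:
    # Tasks the user has explicitly allowed to leave the device (assumed opt-in list).
    cloud_opt_in: Set[str] = field(default_factory=set)

    def route(self, req: Request) -> str:
        """Return 'cloud' only for opted-in tasks; everything else stays local."""
        return "cloud" if req.task in self.cloud_opt_in else "local"


def run_local(req: Request) -> str:
    return f"[local model] {req.task}"  # placeholder for on-device inference


def run_cloud(req: Request) -> str:
    return f"[cloud model] {req.task}"  # placeholder for a remote API call


def handle(router: HybridRouter, req: Request) -> str:
    return run_cloud(req) if router.route(req) == "cloud" else run_local(req)


if __name__ == "__main__":
    router = HybridRouter(cloud_opt_in={"draft_email"})
    print(handle(router, Request("search", "budget spreadsheet")))
    print(handle(router, Request("draft_email", "reply to Dana")))
```

Defaulting to the local path means an unconfigured router errs on the side of privacy: nothing leaves the device unless the user has chosen to allow it.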

But even devices forced to rely on local hardware can deliver impressive results. Lemon says the team behind Spring found ways to coax surprising intelligence out of a small, locally inferenced AI model like Vicuna-13B. Its reasoning can’t compare to GPT’s, but the model can be trained to use contextual tags that trigger prebaked physical movements and expressions that show its interest.
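One plausible reading of “contextual tags” is markup that the model emits alongside its reply, which the robot’s controller strips out of the speech and maps to canned motions. The tag syntax, tag names, and gesture table below are hypothetical; the source does not describe Spring’s actual format.

```python
import re
from typing import List, Tuple

# Hypothetical mapping from model-emitted tags to prebaked robot behaviors.
GESTURES = {
    "nod": "head_nod_sequence",
    "smile": "face_smile_expression",
    "lean_in": "torso_lean_forward",
}

TAG_PATTERN = re.compile(r"<(\w+)>")  # assumed tag syntax, e.g. "<nod>"


def split_reply(reply: str) -> Tuple[str, List[str]]:
    """Separate spoken text from gesture tags; unknown tags are dropped."""
    tags = TAG_PATTERN.findall(reply)
    speech = TAG_PATTERN.sub("", reply)
    speech = " ".join(speech.split())  # collapse leftover whitespace
    actions = [GESTURES[t] for t in tags if t in GESTURES]
    return speech, actions


if __name__ == "__main__":
    text, moves = split_reply("<nod> The cafeteria is on the ground floor. <smile>")
    print(text)   # The cafeteria is on the ground floor.
    print(moves)  # ['head_nod_sequence', 'face_smile_expression']
```

Because the motions are prebaked, the model only has to pick a label rather than plan a movement, a task that plausibly suits the limits of a small local model.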

The empathy of a robot might seem niche compared to “AI PC” aspirations, but the performance and privacy challenges facing the robot are the same ones facing the next generation of AI assistants. And those assistants are beginning to arrive, albeit in more limited, task-specific forms. Rewind is available to download for Mac today (and will soon be released for Windows). The new Apple Watch uses a transformer-based AI model to make Siri available offline. Samsung has plans to bake NPUs into its new home-appliance products starting next year. And Qualcomm’s new Snapdragon chips, soon to arrive in flagship phones, can handle Meta’s powerful Llama 2 LLM entirely on your smartphone, no Internet connection or Web browsing required.

“I think there has been a pendulum swing,” says Intel’s Mahajan. “We used to be in a world where, probably 20 years back, everything was moving to the cloud. We’re now seeing the pendulum shift back. We are seeing applications move back to the edge.”

This article appears in the February 2024 print issue as “Generative AI Slims Down for a Portable World.”

Matthew S. Smith is a freelance consumer technology journalist with 17 years of experience and the former Lead Reviews Editor at Digital Trends. An IEEE Spectrum Contributing Editor, he covers consumer tech with a focus on display innovations, artificial intelligence, and augmented reality. A vintage computing enthusiast, Matthew covers retro computers and computer games on his YouTube channel, Computer Gaming Yesterday.