In the past two years, the U.S. Congress has provided hundreds of billions of dollars to speed the deployment of clean-energy technologies. These investments are one reason why the International Energy Agency (IEA) in September insisted that there’s still hope to hold global temperature rise to 1.5 °C in this century.

Thousands of Washington insiders and climate activists have had a hand in these legislative breakthroughs. Among the most articulate and almost certainly the wonkiest is Jesse Jenkins, a professor of engineering at Princeton University, where he heads the ZERO Lab—the Zero-carbon Energy systems Research and Optimization Laboratory, that is.

In 2021 and 2022, during the high-stakes negotiations over what became the Infrastructure Investment and Jobs Act and the Inflation Reduction Act, the ZERO Lab and the San Francisco–based consultancy Evolved Energy Research operated a climate-modeling war room that provided rapid-fire analyses of the likely effects of shifting investments among a smorgasbord of clean-energy technologies. As legislation worked its way through Congress, Jenkins’s team provided elected officials, staffers, and stakeholders with a running tally of the possible trade-offs and payoffs in emissions, jobs, and economic growth.

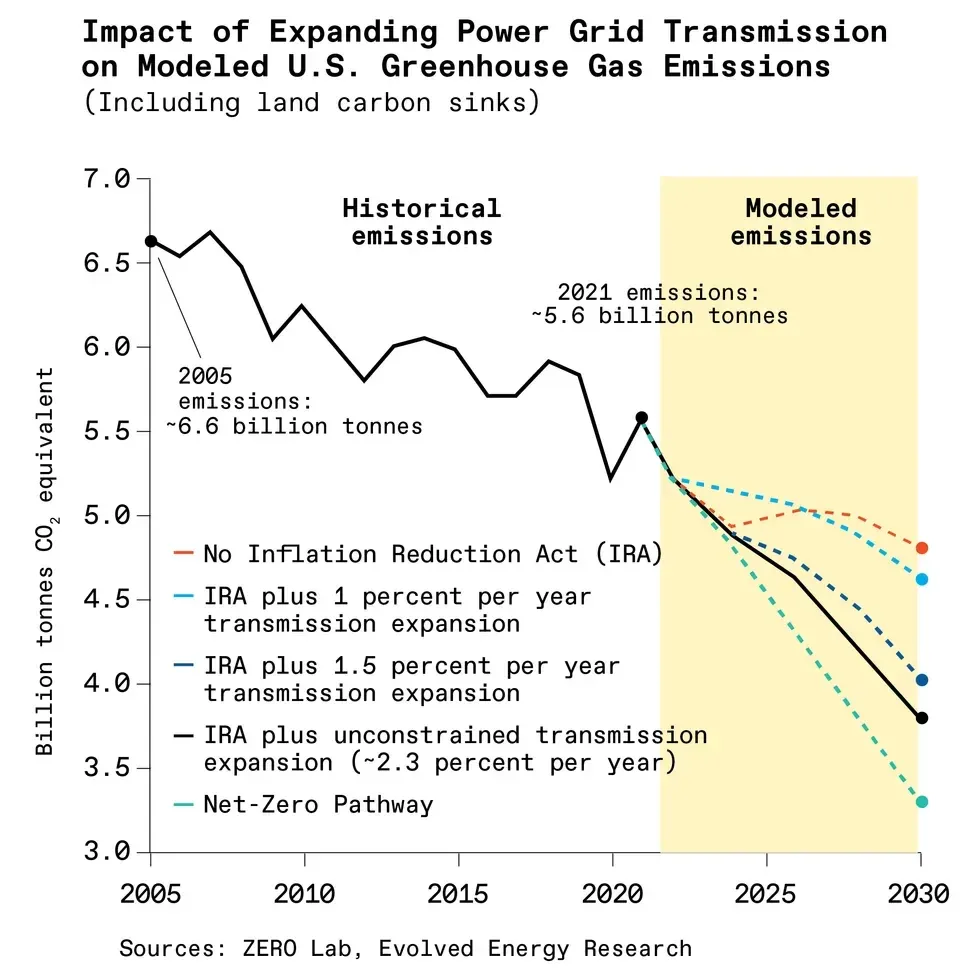

Jenkins has also helped push Congress to think more seriously about the power grid, releasing a report last year that showed that much of the 43 percent emissions reduction expected by 2030 would be squandered if the United States doesn’t double the pace of transmission upgrades.

As The Wall Street Journal noted in a July 2023 profile, Jenkins has played an “outsized role” in determining where federal cash can have the biggest impact, and politicos like White House clean-energy advisor John Podesta name-drop the professor and his numbers to sell their ideas.

IEEE Spectrum contributing editor Peter Fairley recently spoke with Jenkins via Zoom about where the U.S. energy system needs to go and how the latest energy models can help.

Jesse Jenkins on:

- How REPEAT influenced Congress

- How energy-system modeling has evolved

- Why energy modeling is useful

- The value of open-source energy models

- The importance of power grid expansion

- Climate resiliency for the grid

- The role of HVDC transmission

- How the U.S. can meet its emissions goals

The Rapid Energy Policy Evaluation and Analysis Toolkit—REPEAT—which you developed at Princeton with Evolved Energy Research, influenced Congress to create massive incentives for clean-energy tech. How did REPEAT come together?

Jesse Jenkins: In early 2021, given the results of the U.S. presidential election, it seemed that we were entering one of those rare windows where you might see substantial policy action on climate and clean energy.

The U.S. government was going to try a whole bunch of different government interventions—incentive programs, tax credits, grants, infrastructure investments—to bend the trajectory of our energy transition. We realized that as the policy was coming into shape, it was going to be difficult to understand its aggregate impact.

So we decided to launch REPEAT in the spring of 2021, with funding from the Hewlett Foundation. We threw in real policies as they were being proposed and debated in Congress, to provide as close to real-time analysis as possible as to the likely impact of the legislation. We did that throughout the debate on the bipartisan infrastructure bill [which became the Infrastructure Investment and Jobs Act] and the Inflation Reduction Act.

I think that, along with similar efforts by consultancies like the Rhodium Group and Energy Innovation, we provided important real-time information for stakeholders inside and outside the negotiations as to what its likely impact would be and whether it was strong enough. It’s similar to how the Congressional Budget Office tries to score the budgetary impact of legislation as it’s being debated. Those estimates are always wrong, but they’re better than having no estimate. And we were much more transparent than CBO is. They don’t tell you how they come up with their numbers.

How has energy-system modeling evolved to make the detailed simulations and projections like REPEAT’s possible?

Jenkins: Energy systems became globalized in the middle of the 20th century and then encountered global supply shocks, like the oil embargoes of the ’70s. These are complex systems, so it’s hard to predict exactly how an intervention at one point is going to affect everything else. Energy-system models that marry engineering, physics, economics, and policy constraints and concerns allow us to test assumptions, explore actions, and build intuitions about how those systems work.

I entered the field in the mid-2000s, motivated by climate concerns, and I encountered a whole range of questions about the role of emerging technologies, potential policies to reshape our energy systems, and the implications of energy transitions. The tools built in the ’70s and ’80s were not cut out for that. So there’s been quite a flurry of activity from the 2010s on to build a new generation of modeling tools, fit for the energy challenges that we face now.


When I entered the field, commercial wind was starting to scale up and the questions were about engineering feasibility. What was the maximum share of wind that we could have in the system without blowing it up—5 percent or 20 percent or 30 percent? How fast can you ramp your power plants up and down to handle the variability from wind and solar?

Now the questions are much more about implementation, about the pace of the energy transition that’s feasible, and the distribution of the benefits and impacts. That’s demanding that the models go beyond stylized representations of how and where stuff gets built, so that those considerations get embedded right into the modeling practice.

Until recently, energy modeling by the U.S. Energy Information Administration (EIA) and IEA vastly underprojected wind and solar deployments. What are the pitfalls of energy modeling?

Jenkins: These are decision-support tools, not decision-making tools. They cannot give you the answer. In fact, we shouldn’t even think of these models as predictive. We say that the IEA makes projections. Well, they’re really making a scenario that’s internally consistent with a set of assumptions. That “prediction” is only as good as the assumptions that go into it, and those assumptions are challenging. We’re not talking about a physical phenomenon that I can repeatedly observe in an experiment and derive the equations for and know will hold forever, like gravity or the strong nuclear force. We’re trying to project a dynamically changing system involving deep uncertainties where you cannot resolve the probability distribution or even the range of possible outcomes.

We face deep uncertainties because we’re talking about policies that will shape capital investments that will live for 20 or 30 years or longer. If you ask a bunch of experts to predict the cost of a technology 10 years from now, they’re all over the map—9 out of 10 are wrong, and you don’t know which one is right. There’s just so much that is contingent and unknowable. The best we can do is to build tools that allow us to explore possible futures, to build intuition about the consequences of different actions under different assumptions, and to hope that that helps us make better decisions than if we were simply ignorant.

I think the models do succeed and are helping us understand, on a broad scale, the potential implications of energy-system decision making. There may be 30 things that we care about, but maybe five of them are the most important and the other ones we can sort of disregard as second- or third-order concerns. I can’t tell you exactly what the outcome will be for those five parameters. But I can tell you, “These are the ones you want to watch out for, and you want to plan a strategy that is hedged against those five key indicators.”

It sounds like you increasingly must model how society works.

Jenkins: We must at least be able to speak to society’s concerns, beyond just “Do the lights stay on?” and “Is your electricity bill reasonably affordable?” Those are important concerns, but they’re not the only concerns. The Net-Zero America study that we put out towards the end of 2020 and updated in 2021 was a big effort at Princeton. Our team of about 16 people went beyond the high-level question of “What does a pathway to net-zero look like?” to answer “What needs to get built around the United States when, and under what conditions, to actually deliver on what the model says makes sense?”

That required us to go sector by sector and develop techniques for what we call downscaling. If the model wants to have this much capital investment appear at these points in time, there’s a whole process that precedes that, where businesses develop projects, abandon some of them, move forward with others, get regulatory approval for some while others are blocked. You put development capital at risk, you have a certain timeline and success rate, and then construction takes so many years.

So we sort of “backwards plan” from when the model wants things online to get a sense of the types of capital that you need to mobilize at different stages. We did downscaling of where you would build all the wind and solar generation that the model suggested. And then you start to see how siting these resources trades off against other land use or conservation priorities, and who’s going to bear the impacts and gain benefits—such as local tax revenue and jobs versus seeing wind turbines all around your community.
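The “backwards planning” step can be sketched as simple arithmetic. This is a hypothetical illustration, not the Net-Zero America downscaling code: the `Pipeline` class, the success rate, and the development and construction times are made-up parameters, and the sketch assumes all project attrition happens during development.

```python
from dataclasses import dataclass


@dataclass
class Pipeline:
    """Hypothetical project-pipeline assumptions (illustrative only)."""
    success_rate: float      # fraction of capacity entering development that gets built
    development_years: int   # years from starting development to starting construction
    construction_years: int  # years from starting construction to coming online


def backwards_plan(target_gw: float, online_year: int, p: Pipeline) -> dict:
    """Work backward from capacity a model wants online in `online_year`.

    Returns (year, GW) for each stage. Assumes every project that starts
    construction is finished, so all attrition is applied at development.
    """
    if not 0 < p.success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    construction_start = online_year - p.construction_years
    development_start = construction_start - p.development_years
    return {
        "enter_development": (development_start, target_gw / p.success_rate),
        "start_construction": (construction_start, target_gw),
        "online": (online_year, target_gw),
    }


# Made-up example: 10 GW wanted online in 2030.
plan = backwards_plan(10.0, 2030, Pipeline(success_rate=0.5,
                                           development_years=3,
                                           construction_years=2))
for stage, (year, gw) in plan.items():
    print(f"{stage}: {year}, {gw:.1f} GW")
```

Under these made-up parameters, 10 GW online in 2030 means roughly 20 GW of projects entering development in 2025, because half of them are assumed to fall out along the way.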

Spectrum reported on a push in Europe and some U.S. states to require utilities and technology developers seeking public funding to use nonproprietary models, to increase transparency and to involve a wider range of people. Do you see big pluses, and any minuses, to open-source modeling?

Jenkins: We’ve been working on this quite concertedly for many years now. Especially in regulatory proceedings and in modeling efforts meant to shape policy decisions, the data going in should be open.

My first job out of college was at Renewable Northwest, which is a regional renewable energy advocacy group that operates in the northwest states and intervenes in state regulatory proceedings. I engaged in integrated resource planning for the two investor-owned utilities in Oregon—PGE and Pacific Power. Their models provided a range of scenarios meant to let the public and stakeholders interrogate their assumptions and to get answers and to push them to try different things. But those models were entirely proprietary. There was no way to understand how they worked or try them out. Much of the data was made available, but some was redacted for various competitive concerns.

And Oregon is pretty transparent. In other states, utilities submit a document where 90 percent of it is redacted. And there are states like Georgia where public interveners don’t have any right to discovery. That really creates an information asymmetry that benefits the utility to the detriment of both the regulatory staff and public interveners and stakeholders.

So I had this experience where I couldn’t get under the hood and understand how the model worked and propose alternative strategies. When I went to MIT to do my Ph.D., Nestor Sepulveda, who was also a Ph.D. candidate, and I built the GenX electricity-system planning model. We wanted to build a tool that was kind of a Swiss Army knife, with all the tools packed in. Initially, that was so that every master’s and Ph.D. student coming in the door could get straight to the business of answering interesting research questions.

We open-sourced GenX in August 2021 so that we could open up access to others. We received support from ARPA-E [Advanced Research Projects Agency–Energy] to do that. And we’ve been steadily improving it since then. It’s one of several best-in-class electricity-system planning models that are now open source. There’s another called PyPSA that’s getting a lot of use in Europe and elsewhere, one called Switch that came out of Berkeley, and another called GridPath that’s an evolution of Switch.

Getting these models adopted beyond the academic setting presents a lot of challenges. For a proprietary software tool that somebody’s selling under license, they provide training materials and tech support because they want you to find it easy to use, so you keep paying them to use it. You need a similar support ecosystem around an open-source tool. I don’t think it should be a pay-per-license option because that defeats the accessibility of an open-source tool. But there needs to be some infrastructure to support more commercial or public sector uses.

We also need to make these tools easy to use, with a usable interface and straightforward data inputs and processes. We’ve been building a separate open-source tool called PowerGenome that pulls together all the public data from the Department of Energy, EPA [U.S. Environmental Protection Agency], EIA, FERC, and others to create all the input data that you need for a power system model. We’re configuring that to plug into all these different open-source planning tools.

The last piece is the computational barrier. We have a big supercomputer here at Princeton. Not everybody has that in their backyard, but cloud computing has become ubiquitous and accessible. So we’re working also on cloud versions of these tools.

Earlier this year, you raised a red flag when Congress ordered up a 2.5-year grid study from the U.S. Department of Energy, which you said would delay crucial action to upgrade the power grid. Why is grid expansion so important?

Jenkins: One reason is that we’re going to need more electricity. Electricity demand is likely to start growing at a pretty sustained rate due to the growth of electric vehicles, AI and data centers, heat pumps, electrification of industry, and hydrogen production. You need a bigger grid to supply that electricity.

The second reason is that the grid we have is built out to places where there were coal mines and hydropower dams, not where there’s the best wind and sun. So we need to expand the grid in ways that can tap into the best American resources, particularly wind power. Solar panels convert solar radiation to power linearly, in proportion to the amount of sunlight. But the power available to a wind turbine scales with the cube of the wind speed. If you double the wind speed, you get 8 times as much wind power output, so a good wind site is way better than a bad wind site.
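The cube law follows from the standard expression for the kinetic power carried by wind through a swept area, P/A = ½ρv³. The sketch below compares it with an idealized, linear solar model. The air density and 20 percent PV efficiency are illustrative assumptions, and a real turbine follows a power curve that flattens above its rated speed, so the cubic relationship describes the available resource (and output below rated speed) rather than every point on that curve.

```python
AIR_DENSITY = 1.225  # kg/m^3, standard sea-level air density (assumption)


def wind_power_density(wind_speed_m_s: float, air_density: float = AIR_DENSITY) -> float:
    """Kinetic power carried by the wind per square meter of swept area, in W/m^2.

    P/A = 0.5 * rho * v^3. A real turbine captures only part of this,
    bounded by the Betz limit of about 59 percent.
    """
    return 0.5 * air_density * wind_speed_m_s ** 3


def solar_output(irradiance_w_m2: float, efficiency: float = 0.2) -> float:
    """Idealized PV output per square meter: linear in irradiance (assumed efficiency)."""
    return efficiency * irradiance_w_m2


# Double the resource in each case and compare the gain in power.
ratio_wind = wind_power_density(10.0) / wind_power_density(5.0)
ratio_solar = solar_output(1000.0) / solar_output(500.0)
print(f"Doubling wind speed: x{ratio_wind:.0f} power")
print(f"Doubling sunlight:   x{ratio_solar:.0f} power")
```

Air density cancels out of the wind ratio, so the factor of 8 holds regardless of the assumed value.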

How would expanding the grid prevent climate-driven disasters like Winter Storm Uri, the ice storm that devastated Texas in February 2021?

Jenkins: Expanding the grid means that when one part of the grid is struggling with an extreme event, it can rely on its neighbors. Expansion also enables wider electricity markets, which tend to lower electricity costs. We’ve seen a steady expansion of regional transmission organizations, and that trend is now spreading into the Western Interconnection [one of North America’s two large AC grids], as several Western utilities are joining the Southwest Power Pool [SPP, a regional grid operator].

Texas, unfortunately, is its own little grid island. The [Electric Reliability Council of Texas] system is not interconnected with the Western and Eastern Interconnections. It can only exchange a few hundred megawatts of power with each. So when Texas got hit by Uri, it couldn’t pull power from New Mexico or Colorado or further away in the Eastern Interconnection. They’re on their own, and that’s a much more brittle system. A bigger grid is just better, even if we weren’t facing the need to tap a lot of wind power and to meet electrification needs.

Does anything happening inside or outside of Congress give you hope that the grid will meet the challenge of the climate emergency?

Jenkins: There’s the Big Wires Act that’s been introduced in Congress to set minimum standards for interregional transfer capacity. That’s similar to what Europe has done—basically every country has interties so they can trade energy more effectively and lower costs for consumers.

What makes me optimistic is how quickly the transmission issue has gone from off the radar—aside from the wonky proceedings of regional planning boards—to the top of congressional concern. A year ago, we weren’t even having this conversation.


And we had a role in that, helping to elevate the importance of transmission expansion to the overall energy transition. The longer you have your sights on a big problem like this, the more likely you’re going to see creative solutions that make progress, whether it’s more serious efforts by regional transmission organizations or state-level policies or the Federal Energy Regulatory Commission [FERC, which regulates the U.S. transmission grid] taking action or Congress finally getting its act together.

High-voltage DC (HVDC) transmission technology is playing a big role in China and Europe. Does HVDC have a role to play in the U.S. grid?

Jenkins: There is a growing effort to create stronger interties between the Eastern and Western Interconnections. SPP in particular is starting to operate markets on both sides of that divide. And we’ve seen private developers like Grid United working on proposals that would cross that seam.

Another example is the Champlain-Hudson Power Express line under construction from Quebec into New York City. It runs underneath Lake Champlain and the Hudson River for most of its route, and it’s HVDC because DC works much better underground and underwater than AC. There was also a need to keep the project out of sight in order to get the permits. Competing projects with overhead lines were rejected. As we see more challenges in siting long-distance lines, we are likely to see more underground transmission.

There’s a company that’s trying to run HVDC transmission under rail lines, where you already have disturbed ground and it’s easier to secure a right of way. Generally, underground lines are something like 10 times more expensive than overhead lines. But if you can’t build the overhead line at all, underground may be the only way to move forward.

Texas is on its own because the state doesn’t want its power market to be subject to federal regulations. But they could add 10 gigawatts of DC interties to their neighbors without sacrificing that independence. Just the interstate interties would be regulated by FERC. Alas, the Texas legislature is not taking this as seriously as I would have hoped. There have been basically no serious reforms implemented since Uri. They’re just as vulnerable today as they were then.
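A crude way to see why intertie size matters during an event like Uri is a single-hour balance: imports are capped by whichever is smaller, the intertie limit or what neighbors can spare. This is a hypothetical sketch with made-up numbers (a 20 GW shortfall, 15 GW of neighbor surplus, and 300 MW standing in for “a few hundred megawatts”); only the 10 GW intertie figure comes from the interview, and the model ignores internal grid constraints, losses, and neighbors being stressed at the same time.

```python
def unserved_mw(shortfall_mw: float, intertie_limit_mw: float,
                neighbor_surplus_mw: float) -> float:
    """Shortfall left after importing what both the interties and neighbors allow.

    Deliberately crude single-hour balance (hypothetical illustration):
    no internal transmission limits, ramping, or losses.
    """
    for name, value in [("shortfall_mw", shortfall_mw),
                        ("intertie_limit_mw", intertie_limit_mw),
                        ("neighbor_surplus_mw", neighbor_surplus_mw)]:
        if value < 0:
            raise ValueError(f"{name} must be non-negative")
    imports = min(shortfall_mw, intertie_limit_mw, neighbor_surplus_mw)
    return shortfall_mw - imports


# Hypothetical event: 20 GW short, neighbors able to spare 15 GW.
SHORTFALL_MW = 20_000
NEIGHBOR_SURPLUS_MW = 15_000

for label, limit_mw in [("a few hundred MW of ties", 300), ("10 GW of DC ties", 10_000)]:
    left = unserved_mw(SHORTFALL_MW, limit_mw, NEIGHBOR_SURPLUS_MW)
    print(f"{label}: {left:,.0f} MW still unserved")
```

With these invented inputs, small ties barely dent the shortfall, while 10 GW of ties cuts it in half; the real benefit would depend on how much neighboring grids could actually spare at the time.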

Besides boosting grid capacity and building out wind and solar, what’s the most important thing that needs to happen to meet our emissions goals and start slowing climate change?

Jenkins: We have to shut down coal plants as fast as is feasible because they’re by far the most environmentally damaging. We have the ability to substitute for them very quickly and affordably. We probably have to maintain all of our existing natural gas capacity. In some parts of the country, we may need to build some new gas plants to maintain reliability alongside a growing share of wind and solar, but we will use their energy less and less. All the things we don’t like about natural gas, whether it’s methane leaks or fracking or air pollution or CO2 emissions, scale with how much gas we burn. So keep the capacity around, but reduce the amount we burn.

And we will maintain the existing nuclear fleet, so that we’re not shutting down low-carbon reactors while we’re trying to displace fossil fuels.

Doing all that will get us to about an 80 percent reduction in emissions from current levels at a very affordable cost. It doesn’t get us to 100 percent. The last piece is deploying the full set of what I call “clean firm” technologies that can ultimately replace our reliance on natural gas plants—advanced nuclear, advanced geothermal, carbon capture, biomass, hydrogen, biomethane, and all the other zero-carbon gases that we could use. Those technologies are starting to see their first commercial deployments. We need to be deploying almost all of them at commercial scale this decade, so that they’re ready for large-scale deployment in the 2030s and 2040s, the way we scaled up wind in the 2000s and solar since 2010.

We’re now well on our way to that with the Inflation Reduction Act and other state policies. So I’m pretty encouraged to see the policy framework in place.
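Jenkins’s point about keeping natural gas capacity while burning less gas comes down to arithmetic: emissions scale with the energy generated (capacity × capacity factor × hours), not with installed capacity alone. A minimal sketch, assuming a round 0.4 metric ton of CO2 per megawatt-hour (roughly typical of combined-cycle plants) and a hypothetical 100 GW fleet:

```python
HOURS_PER_YEAR = 8_760
# Assumed round emissions rate for gas combined-cycle generation (illustrative).
CO2_T_PER_MWH = 0.4


def annual_gas_co2_mt(capacity_gw: float, capacity_factor: float,
                      t_co2_per_mwh: float = CO2_T_PER_MWH) -> float:
    """Annual CO2 (million metric tons) from gas capacity run at a given capacity factor."""
    if not 0.0 <= capacity_factor <= 1.0:
        raise ValueError("capacity_factor must be between 0 and 1")
    mwh = capacity_gw * 1_000 * HOURS_PER_YEAR * capacity_factor
    return mwh * t_co2_per_mwh / 1e6


# Hypothetical fleet: the same 100 GW of capacity, run hard versus held mostly in reserve.
FLEET_GW = 100.0
for cf in (0.5, 0.1):
    print(f"capacity factor {cf:.0%}: {annual_gas_co2_mt(FLEET_GW, cf):.0f} Mt CO2/yr")
```

Cutting the capacity factor from 50 to 10 percent cuts emissions fivefold while the fleet stays available for reliability. Methane leakage would scale with gas throughput in a similar way, but this sketch tracks only combustion CO2.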

An abridged version of this article appears in the December 2023 print issue as “The Transformer.”


Peter Fairley has been tracking energy technologies and their environmental implications globally for over two decades, charting engineering and policy innovations that could slash dependence on fossil fuels and the political forces fighting them. He has been a Contributing Editor with IEEE Spectrum since 2003.