Video Friday is your weekly selection of awesome robotics videos, collected by your friends at IEEE Spectrum robotics. We’ll also be posting a weekly calendar of upcoming robotics events for the next few months; here’s what we have so far (send us your events!):

ICRA 2022: 23–27 May 2022, Philadelphia

ERF 2022: 28–30 June 2022, Rotterdam, Netherlands

CLAWAR 2022: 12–14 September 2022, Açores, Portugal

Let us know if you have suggestions for next week, and enjoy today’s videos.

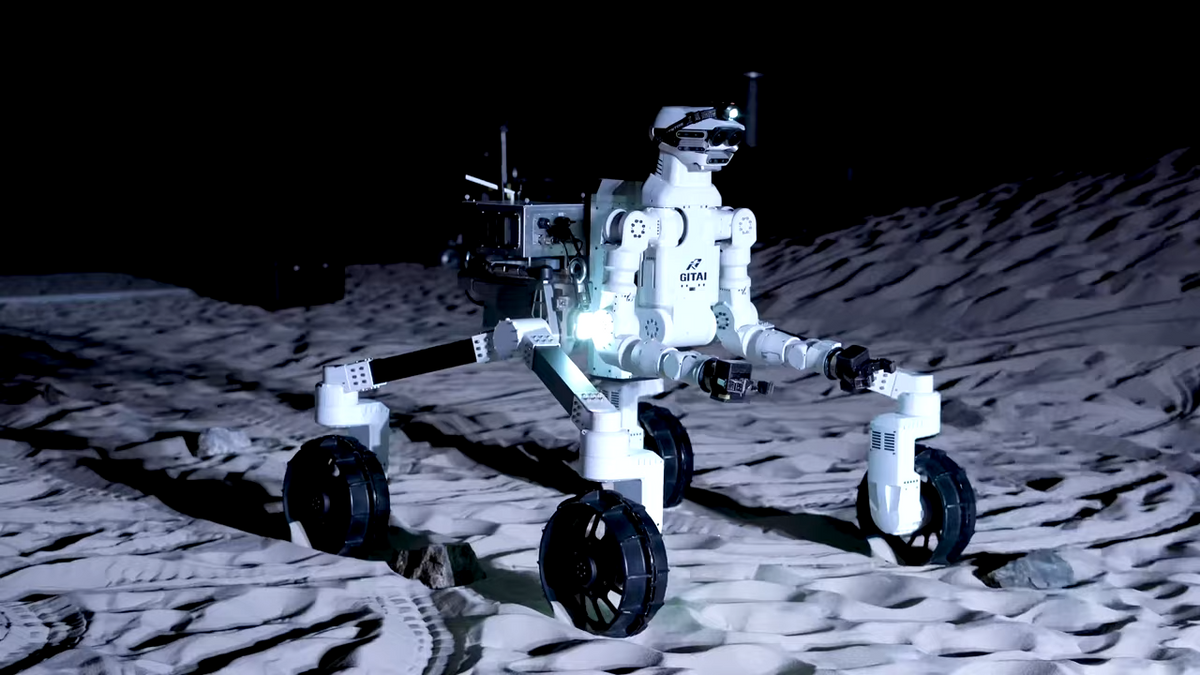

GITAI has developed the advanced lunar robotic rover “R1,” which can perform general-purpose tasks on the moon such as exploration, mining, inspection, maintenance, and assembly. In December 2021, at the mock lunar surface environment on JAXA’s Sagamihara Campus, the GITAI lunar robotic rover R1 conducted numerous tasks and mobility operations, successfully completing all planned tests. Here we release a digest video of the demonstration.

This is cool-looking, but I’d need to know more about how this system can deal with an actual lunar environment, right? Dust, temperatures, horrible lighting, that sort of thing.

[ Gitai ]

I have questions: Where did Spot put that beer? Why are some robots explicitly shown being remote controlled some of the time? Is this actually the first we’ve seen BD putting manipulators on Atlas? And how much of the footage is composited because otherwise those guys are standing way too close to some actually dangerous robots?

[ Boston Dynamics ]

Some very clever work from the University of Zurich, leveraging event cameras to target 3D sensors on stuff you care about: the parts of the scene that move.

We show that, in natural scenes like autonomous driving and indoor environments, moving edges correspond to less than 10% of the scene on average. Thus our setup requires the sensor to scan only 10% of the scene, which could lead to almost 90% less power consumption by the illumination source.

[ Paper ]
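The power figure in the quote follows from simple arithmetic if you assume illumination power scales linearly with the fraction of the scene that gets scanned. Here is a minimal Python sketch of that estimate. It is not the authors’ code: the boolean “event mask” marking where the event camera reports moving edges, the helper names, and the linear power model are all hypothetical simplifications.

```python
from typing import Sequence


def moving_fraction(event_mask: Sequence[Sequence[bool]]) -> float:
    """Fraction of pixels flagged as moving edges in a hypothetical event mask."""
    total = sum(len(row) for row in event_mask)
    if total == 0:
        raise ValueError("event_mask is empty")
    active = sum(1 for row in event_mask for px in row if px)
    return active / total


def illumination_power_savings(scan_fraction: float) -> float:
    """Estimated fraction of illumination power saved versus scanning everything.

    Assumes (as a simplification, not a claim from the paper) that
    illumination power is proportional to the fraction of the scene scanned.
    """
    if not 0.0 <= scan_fraction <= 1.0:
        raise ValueError("scan_fraction must be in [0, 1]")
    return 1.0 - scan_fraction


# Toy 10x10 scene in which one row of 10 pixels contains moving edges.
mask = [[False] * 10 for _ in range(10)]
mask[4] = [True] * 10

fraction = moving_fraction(mask)                # 0.1
savings = illumination_power_savings(fraction)  # roughly 0.9
print(f"scan {fraction:.0%} of the scene, save about {savings:.0%} of illumination power")
```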

This historic BLACK HAWK flight marks the first time that a UH-60 has flown autonomously and builds on recent demonstrations at the U.S. Army’s Project Convergence 2021. It illustrates how ALIAS-enabled aircraft can help soldiers successfully execute complex missions with selectable levels of autonomy—and with increased safety and reliability.

[ Lockheed Martin ]

The Omnid mobile manipulators are an experimental platform for testing control architectures for (1) autonomous multi-robot cooperative manipulation, and (2) human-collaborative manipulation. This video demonstrates human-collaborative manipulation.

[ Northwestern ]

Based on the behavior of actual cats.

[ Petoi ]

Thanks, Rongzhong!

ElectroVoxel is a cube-based reconfigurable robot that utilizes an electromagnet-based actuation framework to reconfigure in three dimensions via pivoting. To demonstrate this, we develop fully untethered, three-dimensional self-reconfigurable robots and demonstrate 2D and 3D self-reconfiguration using pivot and traversal maneuvers on an air-table and in microgravity on a parabolic flight.

[ MIT CSAIL ]

I’m not sure I’ve seen this particular balancing behavior from Digit demonstrated quite so explicitly.

[ Agility Robotics ] via [ Steve Jurvetson ]

We’ve seen some superimpressive terrain and stairs navigation from quadrupeds recently, so let’s take a minute to remember what state of the art looked like just a few years ago.

What’s normal now looks just about like that old footage sped up 20x.

[ RSL ]

The main objective of the HAV project is to research and develop the first Greek Autonomous Vehicle (Level 3+) for both research and commercial purposes.

[ HAV ]

I would pay real money for a 3D autonomous safety geofence for all of my drones if it was able to handle high speeds, dynamic motions, and me screwing up.

[ Paper ]

I really appreciate that iRobot has taken care to design its robots to be repairable by end users with a screwdriver and nothing else.

[ iRobot ]

Semblr is probably the most famous canteen employee in the UK at the moment. That is because the centerpiece of Semblr is a robot, or, to be more precise, a KR AGILUS. Two Karakuri employees assist him and load the ingredients into Semblr’s serving chambers. The KR AGILUS robot arm is responsible for mixing and serving the ingredients.

[ Kuka ]

AutoX’s RoboTaxi fleet has exceeded 1,000 vehicles. This is a monumental milestone for the company, as AutoX’s RoboTaxi fleet is the largest in China. Scaling beyond 1,000 RoboTaxis represents a significant leap forward for larger-scale commercialization.

[ AutoX ]

How to knock down [a] heavy object as a bipedal robot.

[ Vstone ]

Based in the Netherlands, apo.com Group, a leader in automated online pharma, has invested in a fleet of our RightPick piece-picking robots to integrate with its cube storage automation from AutoStore.

It’s interesting what aspects of automation use actual robots, and what don’t.

[ RightHand Robotics ]

Trossen Robotics engineers go over their first impressions of AgileX Robotics’ ROS UGV rovers and their development kits.

[ Trossen Robotics ]

This week’s CMU RI Seminar is from Matthew Johnson-Roberson, Director of the CMU Robotics Institute, on “Lessons from the Field.”

Mobile robots now deliver vast amounts of sensor data from large unstructured environments. Processing and interpreting this data poses many unique challenges in bridging the gap between prerecorded datasets and the field. This talk will present recent work applying machine learning techniques to mobile robotic perception. We will discuss solutions for assessing risk in self-driving vehicles, using thermal cameras for object detection and mapping, and, finally, object detection, grasping, and manipulation in underwater settings. Real field data will guide this process, and we will show results on deployed field robotic vehicles.

[ CMU RI ]

Evan Ackerman is a senior editor at IEEE Spectrum. Since 2007, he has written over 6,000 articles on robotics and technology. He has a degree in Martian geology and is excellent at playing bagpipes.