Alan Sill, Centre Co-Director of the National Science Foundation Cloud and Autonomic Computing Centre, explains why industry standards are essential for reducing carbon emissions in data centre settings through the use of renewable energy.

The rapid growth of Artificial Intelligence (AI) and other power-hungry computing and data processing applications has driven explosive expansion in the deployment of large-scale data centre facilities worldwide. The data centre industry is under increasing pressure to reduce its contribution to global emissions while meeting this demand for more processing capability. One way to meet the simultaneous needs for increased processing and reduced carbon emissions is to site more data centres at or near locations where renewable energy is produced.

NSF CAC

The National Science Foundation (NSF) Cloud and Autonomic Computing (CAC) industry/university co-operative research centre is devoted to advanced industry-driven research and development in cloud, distributed, and autonomic computing methods and their application to a broad range of needs for its partners. The overall mission of the CAC Centre is to pursue fundamental research and development in cloud and autonomic computing in collaboration with industry and government. The technical scope of the Centre’s activities includes design and evaluation methods, algorithms, architectures, software, and mathematical foundations for advanced distributed and automated computing systems. Solutions are studied for different levels, including the hardware, networks, storage, middleware, services, and information layers.

The availability of large quantities of renewable energy can break the cost curve for large-scale computing. For large-scale computing centres, energy is a significant fraction of operating cost that can reach approximately the same level as capital investment over the lifetime of the equipment.

Wind power and solar energy are increasingly available in significant quantities, but unlike previous sources of renewable energy such as hydroelectric plants, each has significantly variable availability and cost (sometimes even negative) throughout the day. To make the best use of these energy sources, data centres will need to be highly automated and preferably situated in remote locations near these sources to reduce transmission costs.

The NSF CAC work in data centre automation, analytics, and control standards applies the expertise of leadership with decades of experience in these processes and methods to this problem. The CAC partners with leading global standards developing organisations, such as the Distributed Management Task Force (DMTF), the Storage Networking Industry Association (SNIA), and the Open Grid Forum (OGF), and works through them to develop, test, and implement standards for adoption by other global organisations such as ISO and its international partners. Through these partnerships, the CAC has a long track record of producing and influencing standards that achieve global adoption.

Recent projects of the CAC have developed an increasing focus on standards needed to achieve practical adoption of data centre automation, analytics, and control in remote and renewable-energy-powered settings. Working with DMTF, SNIA, and OGF, the CAC has carried out extensive testing for the Redfish and Swordfish standards used to provide such capabilities for computing and storage equipment, for data transfer and operations, and for the electrical management and automation of an increasing range of data centre infrastructure. Such automation and control are crucial to the successful deployment and operation of data centres under the variable energy availability conditions implicit in the use of wind and solar energy and, equally, bring cost-efficiency benefits to other settings.

World-leading facilities

The CAC works closely with Texas Tech University’s (TTU) Global Laboratory for Energy Asset Management and Manufacturing (GLEAMM), which has a core mission of attracting and conducting multidisciplinary research in the energy field with a partnership with federal institutions, state agencies, the private sector, and other universities. The mission of GLEAMM is to verify, validate, and characterise existing technologies used in the energy field, as well as to develop new cutting-edge technologies and make them available to the public through research commercialisation and scientific publications.

GLEAMM has a wide array of capabilities specifically tailored to research on power grid infrastructure modernisation and renewable energy. The GLEAMM resources include a 150-kW solar array, access to multiple wind turbines at the scale of several hundred kVA each in partnership with General Electric (GE) and Sandia National Laboratories, an array of distributed phasor measurement units (PMUs) to monitor the grid, 1-MW programmable resistive loads, a 187-kVAR programmable load, a 30-kW four-quadrant inverter, a 500-kW diesel generator, an 81.6-kWh energy storage system, and a control building fully instrumented with voltage and current sensors for data acquisition and a local meteorological station. These energy generation and storage capabilities thus span the full range of energy sources likely to be encountered in new data centre deployments.

The GLEAMM microgrid combines the research and commercialisation expertise of Texas Tech University with next-generation industry technologies for protecting, enhancing, and managing electricity transmission and distribution. GLEAMM’s primary goal is to provide a functionally complete infrastructure for innovative studies in different areas related to renewable energy and microgrids, such as modernisation, energy management, power quality, control, and operation.

In addition to these hardware capabilities, GLEAMM also has OPAL-RT ‘hardware-in-the-loop’ (HIL) simulation equipment, which is used to merge computer-based models with hardware devices to study the interactions between the two. For software simulation, GLEAMM has licences for several industry-standard tools, such as PSS/E, PSCAD, PowerWorld, EXata CPS, and VOLTTRON. The CAC’s simulation and computing resources can supplement the real-world equipment, making it possible to model and understand the behaviour of much larger facilities.

These resources and facilities are located at the GLEAMM site at Reese Center, just west of Lubbock, Texas. Reese Center is a former Air Force base that now houses a range of academic instructional and research facilities, as well as several industrial tenants, about a 20-minute drive from the Texas Tech main campus. GLEAMM can draw power directly from three research wind towers with a peak capacity of 300 kVA each, which generate approximately 200 kW apiece at wind speeds of 11 m/s, and from a solar array with a peak capacity of 150 kVA on its site at the Reese Center. These wind towers, operated by Sandia National Laboratories, are connected directly to the switch equipment at the GLEAMM Microgrid building, where the control equipment is located. The facilities are also close to commercial-grade wind towers operated by General Electric and can access commercial power through lines operated by the South Plains Electric Cooperative (SPEC).

For any given load, resources that need continuous power – for example, to preserve system readiness, manage access and availability, and maintain essential functions – can be kept in operation with external commercial power or our on-site diesel generator for backup as needed. The GLEAMM facilities are extensively instrumented with lab-grade sensors and automation to allow studies to be conducted on practical methods to smooth, regulate, operate, and balance workloads based on local and distributed power availability in a highly instrumented research setting.

Through an Automatic Transfer Switch (ATS), the microgrid can be connected either to the primary grid or to a backup diesel generator, depending on its operational mode. The ATS switches the microgrid between two operational modes. In the usual mode, called ‘grid-connected mode’, the microgrid is connected to facility power and the diesel generator is off. In rare cases, the microgrid enters a second mode, called ‘island mode’, in which it is disconnected from facility power and the battery-stored energy and diesel generator become the system’s main sources.

The transition from grid-connected to island mode can be planned or unplanned. A planned transition happens when the operator intentionally makes the change, first starting the generator and then reducing the green power generation level. Once the green power generation is reduced and the diesel generator reaches its steady state, the generator is synchronised with the grid and the ATS then switches from the grid connection to the generator connection. Renewable energy generation must be below the load level when this takes place because the diesel generator cannot absorb any excess power. Unplanned transitions can occur if the facility grid loses power. When the entire microgrid is off due to a facility outage, the solar plant and wind turbines are isolated, and the diesel generator is started automatically. However, the generator needs a few minutes to reach its steady state before it can connect to the system and feed the microgrid in island mode. During this short interval, ordinary loads are de-energised, while critical and priority loads switch to battery backup systems to maintain their power supply.
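The precondition for a planned transition can be written down compactly. The following is a minimal sketch, not the facility's actual control code: the names `Mode`, `MicrogridState`, `can_transfer_to_island`, and `planned_transition` are hypothetical, and the check simply encodes the two conditions described above (generator at steady state, renewable output below the load).

```python
from dataclasses import dataclass, replace
from enum import Enum


class Mode(Enum):
    GRID_CONNECTED = "grid-connected"
    ISLAND = "island"


@dataclass(frozen=True)
class MicrogridState:
    mode: Mode
    generator_steady: bool  # diesel generator has reached steady state
    renewable_kw: float     # combined solar and wind output
    load_kw: float          # total load on the microgrid


def can_transfer_to_island(state: MicrogridState) -> bool:
    """True when a planned grid-to-island transfer satisfies the stated conditions."""
    return (
        state.mode is Mode.GRID_CONNECTED
        and state.generator_steady
        # The generator cannot absorb surplus power, so renewables must stay below load.
        and state.renewable_kw < state.load_kw
    )


def planned_transition(state: MicrogridState) -> MicrogridState:
    """Return the state after the ATS switches, or raise if the transfer is unsafe."""
    if not can_transfer_to_island(state):
        raise RuntimeError("preconditions for planned island transfer not met")
    return replace(state, mode=Mode.ISLAND)
```

For example, a state with 40 kW of renewable output against a 60 kW load and a steady generator passes the check, while 80 kW of renewable output against the same load does not, so the operator would need to curtail generation first.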

The GLEAMM microgrid is configured to give priority service to its critical load, such as data centre and computational resources powered by renewable energy. This load is connected to the microgrid through an Outback inverter operated in parallel with a 1,600-Ah lithium-ion battery, which acts much like a conventional uninterruptible power system (UPS) to maintain reliability if other sources of energy are lost. Once a loss of power is detected at the input of the Outback device, its inverters automatically isolate the system from the outage and use the battery to supply power to the critical load without noticeable delays or transients. The difference between this and a conventional UPS lies in the ability of the combined system to handle multiple input sources of power and manage the switching appropriately.

To connect all these devices to the main microgrid bus (MCC), the facility has several circuit breakers, fuses, and command panels that ensure the system’s protection and safety. The microgrid is operated and controlled through an SEL-3530 Real-Time Automation Controller (RTAC) that, with its bi-directional communication and Human-Machine Interface (HMI), allows the operator to visualise the system’s measurements and send commands back to each device. Furthermore, measurements from the eGauge meters located at different devices provide the microgrid’s observability, data acquisition, and supervision.

Two Phasor Measurement Units (PMUs) are also available in the facility to collect the voltage magnitude, frequency, and angle in real time. One of these devices is dedicated to monitoring the input and output of the Outback inverter, ensuring that the critical load is constantly kept within acceptable power quality levels. Besides going to the SEL-3530, all these measurements are sent to a remote interactive platform for data visualisation and to a database in which the information is recorded for post-event analysis. In addition to establishing bi-directional communication with all the microgrid elements, the RTAC also contains internal control algorithms developed by previous research projects.

The communication system for the GLEAMM microgrid devices is configured to support a broad range of industry-standard and customised tests. One of the deployed algorithms controls the five solar inverters by sending set points for their power generation. These set points come either from a Maximum Power Point Tracker (MPPT), which seeks to maximise the energy available from renewable energy sources, or from a defined value. The set point entered through the user interface is a crucial command for island mode operation, where the solar generation needs to be less than the load so that the generator is not forced to absorb the surplus power. If the solar generation exceeds the loads in island mode, the diesel generator’s protection is automatically triggered, preventing the generator from absorbing any power.
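The choice between the MPPT value and a defined value can be illustrated with a short sketch. This is an assumption-laden illustration rather than the deployed RTAC algorithm: the function names, the `margin_kw` safety margin, and the even split across the five inverters are all hypothetical.

```python
from typing import List, Optional

NUM_INVERTERS = 5  # the article mentions five solar inverters


def total_solar_set_point_kw(
    mppt_kw: float,
    load_kw: float,
    island_mode: bool,
    defined_kw: Optional[float] = None,
    margin_kw: float = 1.0,  # hypothetical margin kept between solar output and load
) -> float:
    """Pick the total solar set point from the MPPT value or a defined value."""
    # Without a defined value, follow the MPPT; otherwise never exceed the defined value.
    target = mppt_kw if defined_kw is None else min(mppt_kw, defined_kw)
    if island_mode:
        # In island mode solar output must stay below the load,
        # because the diesel generator cannot absorb a surplus.
        target = min(target, max(load_kw - margin_kw, 0.0))
    return target


def per_inverter_set_points(total_kw: float) -> List[float]:
    """Split the total evenly across the inverters (an assumed policy)."""
    return [total_kw / NUM_INVERTERS] * NUM_INVERTERS
```

With an MPPT value of 120 kW and an 80 kW load, this sketch returns 120 kW in grid-connected mode and 79 kW in island mode, which keeps solar output just under the load.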

Another control in the RTAC is the Outback battery manager. Using the measurements collected in the RTAC, it calculates the total amount of power generated and consumed by the microgrid. Whenever generation is higher than consumption, the algorithm sends a command to the Outback inverter to connect to the microgrid, powering the critical loads and charging the battery. Once generation falls below consumption, the RTAC directs the Outback to disconnect from the microgrid and use the battery to maintain power to the critical load. With this control, we can store the excess energy generated and use it when renewable energy production is insufficient. The battery discharges only to a predetermined level, which preserves a reserve for any further shortfall and protects the battery from deep discharge. When this minimum level is reached, the RTAC directs the Outback to use microgrid power again.
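The decision rule described above fits in a few lines. The sketch below is illustrative only: `OutbackCommand`, `battery_manager`, and the 30% `min_soc` floor are hypothetical names and values, and treating exactly balanced generation and consumption as a deficit is an assumption, since the text does not cover that case.

```python
from enum import Enum


class OutbackCommand(Enum):
    USE_MICROGRID = "connect to microgrid (supply critical load, charge battery)"
    USE_BATTERY = "disconnect from microgrid (supply critical load from battery)"


def battery_manager(
    generation_kw: float,
    consumption_kw: float,
    state_of_charge: float,  # fraction between 0.0 and 1.0
    min_soc: float = 0.3,    # hypothetical deep-discharge floor
) -> OutbackCommand:
    """Choose the command sent to the inverter for the current measurements."""
    if generation_kw > consumption_kw:
        # Surplus renewable power: feed the critical load and charge the battery.
        return OutbackCommand.USE_MICROGRID
    if state_of_charge <= min_soc:
        # Reserve reached: fall back to microgrid power to avoid deep discharge.
        return OutbackCommand.USE_MICROGRID
    # Deficit with charge available: run the critical load from the battery.
    return OutbackCommand.USE_BATTERY
```

A real controller would likely add hysteresis around both thresholds so the inverter does not toggle rapidly when generation hovers near consumption; that refinement is omitted here for brevity.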

Supplementing the physical infrastructure, the GLEAMM facility incorporates a Real-Time Digital Simulator from OPAL-RT, in which the entire microgrid and its devices are modelled as a fully simulated system. This simulator can be used to model real electrical systems with high reliability and to track the behaviour of real electrical devices through real-time simulation. With these facilities and the full microgrid model, GLEAMM controls can be tested and validated in a virtual environment under a wide variety of projected operating conditions, without any risk of compromising the real electrical system. The same model can also be used to study the behaviour of the much larger facilities likely to be used in industrial data centre settings.

Data centre instrumentation and visualisation

Adding to the above resources for energy generation, storage, and instrumentation, the CAC also brings advanced capabilities and extensive experience developed over decades of operating data centre computing, storage, networking, and distributed systems.

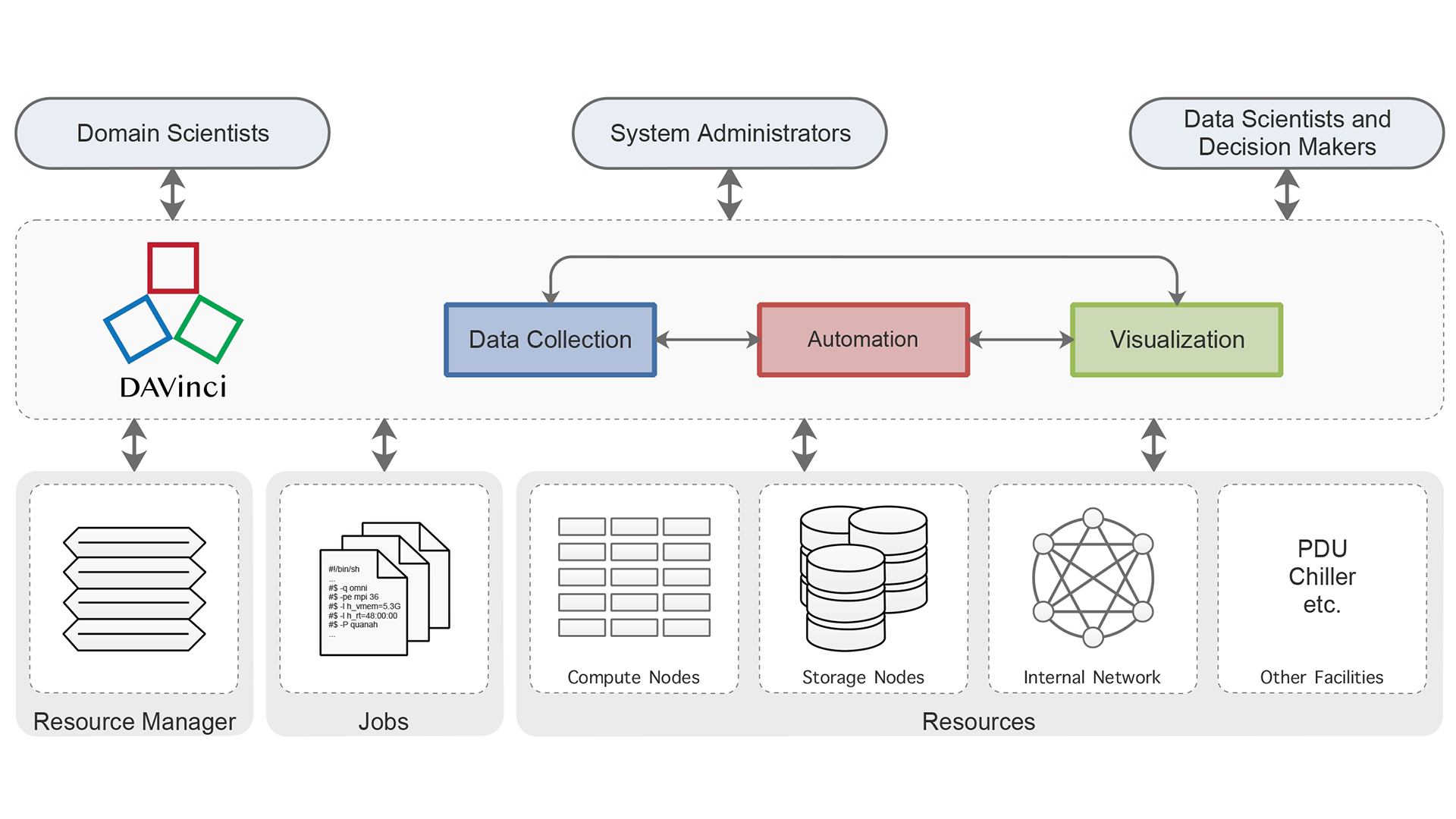

The CAC has developed the DAVinci™ instrumentation and automation suite of tools, which brings an integrated approach to the widely disparate needs for analytics in data centre settings. DAVinci differs from previous data collection, automation, and visualisation tools in that it incorporates a holistic end-to-end design that optimises data gathering, flow, and efficiency. While it partially overlaps with existing monitoring or visualisation solutions, such as those discussed below, none of the previous tools provides an integrated framework and coupled set of tool suites in the way that DAVinci does.

Previous data collection infrastructures include separate IPMI-based server lights-out hardware management, Redfish-based improvements on these tools, SNMP tools, and tools to retrieve server System Event Log (SEL) data. A wide variety of device-specific protocols exist for managing and controlling hardware devices, such as dedicated sensor and control systems, rack and room power distribution units (PDUs), and other custom data centre equipment. Many of these can now be gathered into the generalised Redfish data centre automation and management protocol through customised schemas designed for this purpose.

DAVinci leverages the Redfish protocol and tools developed and tested by the CAC in gathering the status of resources. This system makes full use of telemetry metrics definition and collection capabilities and server-side aggregated push data transmission features of Redfish to optimise and customise data collection.
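To make the data flow concrete, here is a minimal sketch of how a collector might regroup a pushed telemetry report into per-metric time series. It is not DAVinci's code. The property names `MetricValues`, `MetricId`, `MetricValue`, `Timestamp`, and `MetricProperty` follow the Redfish MetricReport schema as we understand it, while the sample payload, its metric names, and the `group_metric_values` helper are hypothetical.

```python
import json
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical report body, shaped like a Redfish MetricReport.
SAMPLE_REPORT = """
{
  "Id": "NodeTelemetry",
  "Name": "Example node telemetry report",
  "MetricValues": [
    {"MetricId": "PowerConsumedWatts", "MetricValue": "310",
     "Timestamp": "2024-01-01T00:00:00Z",
     "MetricProperty": "/redfish/v1/Chassis/1/Power#/PowerControl/0/PowerConsumedWatts"},
    {"MetricId": "CPUTemperature", "MetricValue": "61",
     "Timestamp": "2024-01-01T00:00:00Z",
     "MetricProperty": "/redfish/v1/Chassis/1/Thermal#/Temperatures/0/ReadingCelsius"},
    {"MetricId": "PowerConsumedWatts", "MetricValue": "325.5",
     "Timestamp": "2024-01-01T00:01:00Z",
     "MetricProperty": "/redfish/v1/Chassis/1/Power#/PowerControl/0/PowerConsumedWatts"}
  ]
}
"""


def group_metric_values(report_json: str) -> Dict[str, List[Tuple[str, float]]]:
    """Group a report's readings into {metric id: [(timestamp, value), ...]}."""
    report = json.loads(report_json)
    series: Dict[str, List[Tuple[str, float]]] = defaultdict(list)
    for entry in report.get("MetricValues", []):
        # Metric values arrive as strings in the report, so convert them to numbers.
        series[entry["MetricId"]].append(
            (entry["Timestamp"], float(entry["MetricValue"]))
        )
    return dict(series)
```

Calling `group_metric_values(SAMPLE_REPORT)` yields one list of timestamped readings per metric, a convenient shape for storing in a time-series database or passing to a visualisation layer.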

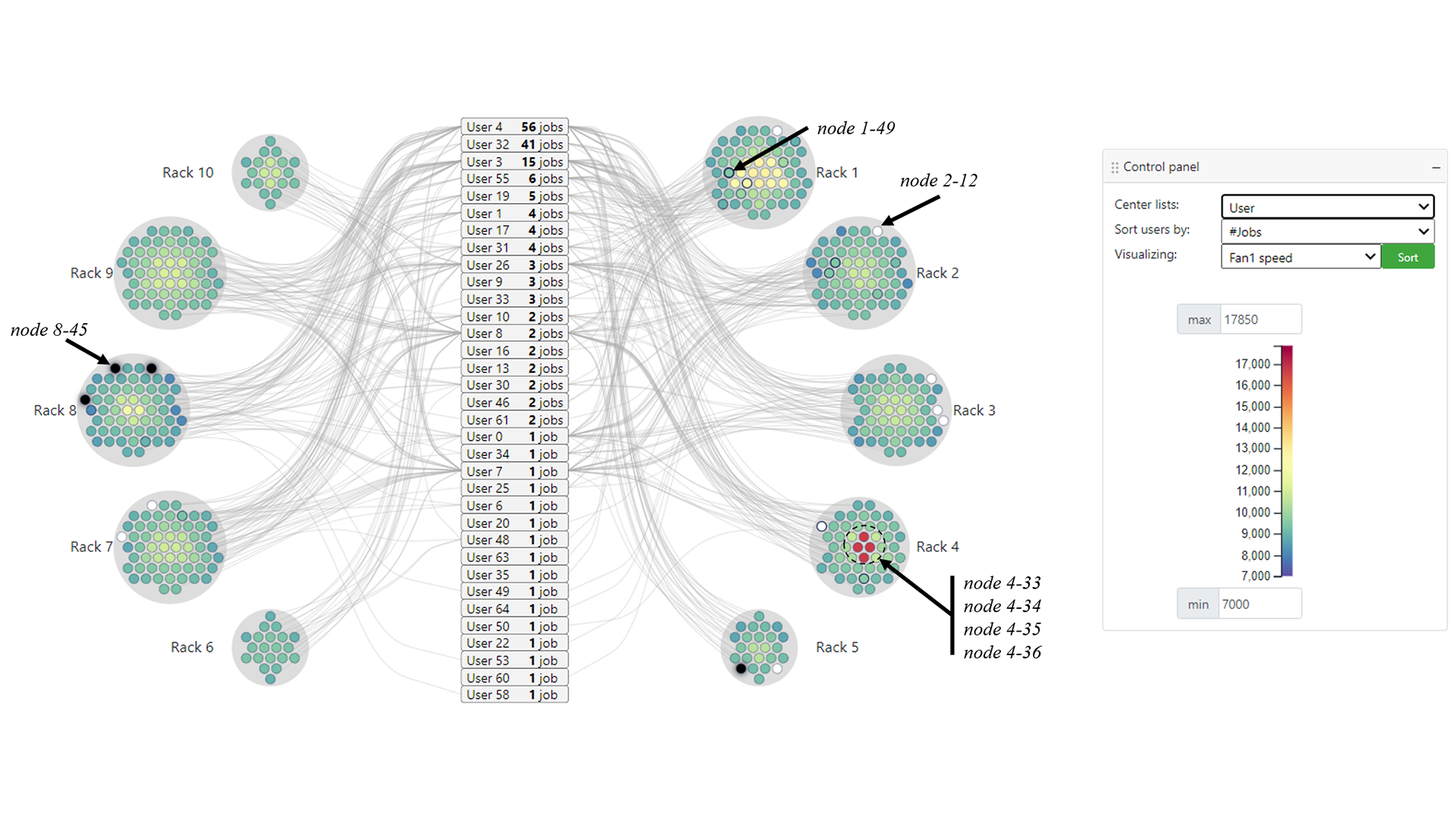

The visualisation component of DAVinci is built on top of the data collection component to provide interactive visual representations for situational awareness and monitoring of high-performance computing (HPC) systems. The visualisation requirements span the following dimensions: HPC spatial layout (the physical location of resources in the system), temporal domain (as described in the metrics collector), and resource metrics (such as CPU temperature, fan speed, and power consumption).

The visualisation component provides spatial and temporal views across nodes, racks, and other facilities and allows DAVinci users to filter by time-series features, such as sudden changes in temperature, for system troubleshooting. It also supports the correlation of job and resource metrics via multidimensional analysis and integrates with the automation component so that Machine Learning techniques can be applied to characterise HPC systems and predict their behaviour.
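As an illustration of what filtering by a time-series feature can mean, the sketch below flags sudden changes between consecutive temperature readings. It is a deliberately simple stand-in, not DAVinci's method: the `sudden_changes` function and the fixed threshold are hypothetical, and a production tool would likely use more robust detection.

```python
from typing import List, Tuple


def sudden_changes(
    readings: List[Tuple[int, float]],  # (timestamp, value) pairs in time order
    threshold: float,                   # minimum jump between samples to report
) -> List[Tuple[int, float]]:
    """Return (timestamp, delta) for each step whose magnitude reaches the threshold."""
    events = []
    # Walk over consecutive pairs of readings and compare each to its predecessor.
    for (_, previous), (timestamp, current) in zip(readings, readings[1:]):
        delta = current - previous
        if abs(delta) >= threshold:
            events.append((timestamp, delta))
    return events


cpu_temps = [(0, 60.0), (1, 61.0), (2, 72.0), (3, 71.5)]
print(sudden_changes(cpu_temps, threshold=5.0))  # [(2, 11.0)]
```

Only the jump from 61.0 to 72.0 at timestamp 2 is reported, which is the kind of event an operator would want highlighted when troubleshooting a node.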

Through its partnerships with international standards organisations, industry members, and government agencies throughout the world, the CAC is well positioned to help your organisation take the steps needed to achieve a practical reduction of emissions while deploying much larger-scale computational business capabilities to meet the growing challenges of Artificial Intelligence, data centre operations, and data processing in the modern world. Contact information is below.

Please note, this article will also appear in the fifteenth edition of our quarterly publication.
